Patrick Kinner shares notes from a session he attended at the recent American Evaluation Association conference in New Orleans:

Evaluation is a unique and diverse discipline, and most evaluation work is done alongside people running programs rather than other evaluators. Having 2,500 other people who do the same work I do attending the same conference was an enriching experience.

Aside from the professional characteristics that define evaluation (participatory collaboration and design sessions, a commitment to rigorous data collection, and an emphasis on using data for program and service improvement), there was something else striking on display at the conference: evaluation and the stories evaluation data can tell are valuable tools for promoting social equity and social justice.

Nowhere was that more evident than in the wonderful session I attended, led by Elizabeth McGee, the Director of Systems Change Initiatives and Evaluation at Leap Consulting, https://www.

Project Conceptualization/Needs Identification

Harm: Limited community engagement during project inception, arbitrary start and end dates, and dehumanizing language that calls people “subjects” or “populations”

Heal: Include “community trust” as a deliverable, allow success to be community-defined, and compensate community members for involvement in the design phase

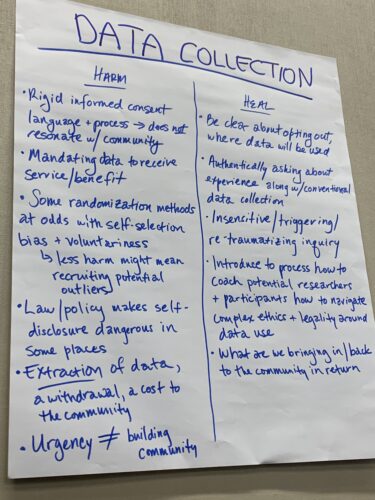

Data collection

Harm: Rigid informed consent, availability of data affecting participation, and self-disclosure of data that can be risky for many

Heal: Allow opting out of all data collection, share the data back with participants, and include data on perceptions of involvement

Methodology

Harm: Asking only about the project-relevant experience rather than actual human experience, study designers' definitions of evidence kept separate from participants' definitions, and quantitative data valued over qualitative data

Heal: Consider the community’s past experiences before beginning data collection, define rigor locally, and add community goals when they differ from project goals

Data dissemination and use

Harm: Reporting formats dictated by the funder, decontextualized results, and impact driven by funder need rather than community need

Heal: Share results with the community first as the start of an ongoing conversation, have community members provide the context for findings, and disseminate the results to all relevant groups, not just those connected to the evaluation project